VMware’s latest release, Workstation 14, adds support for a new disk type: virtual NVMe. Here’s how the release notes describe it:

> Virtual NVMe support: Workstation 14 Pro introduces a new virtual NVMe storage controller for improved guest operating system performance on Host SSD drives and support for testing VMware vSAN. NVMe devices require virtual hardware version 13 / ESXi 6.5 compatibility and later.

Improved guest performance? I like the sound of that. I want to see this in action, so I set up a test environment.

I have a pretty beefy host machine that’s based on the Stack Overflow Developer Desktop Build. Here’s what we’re working with:

- Intel Core i7-6900K

- 64GB RAM (PC4-19200)

- Dual Samsung 960 PRO Series 512GB NVMe M.2 drives, set up as a Windows striped volume (software RAID-0)

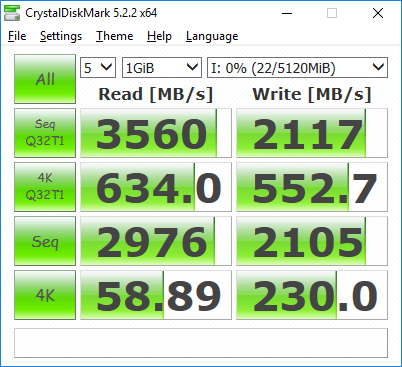

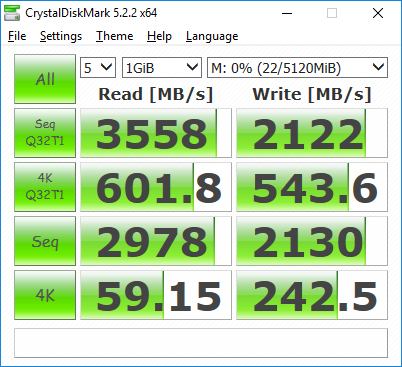

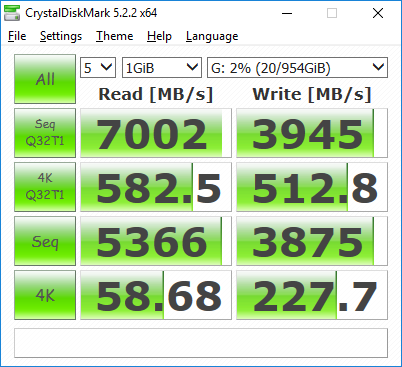

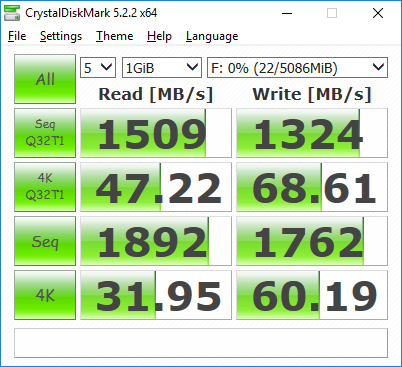

Here are some baseline benchmarks from the host, taken with CrystalDiskMark: each Samsung 960 PRO on its own, and then both working together in a striped volume.

Disk 1 960 PRO Performance Benchmark

Disk 2 960 PRO Performance Benchmark

Above you can see very similar, very fast results. Now let’s see them working together as a single striped volume.

The striped volume mainly shows a huge improvement in sequential operations and a minor reduction in random operations. Now that we have a baseline for what the underlying hardware on the host can handle, let’s run the same benchmark inside VMware Workstation 14 against both the brand new virtual NVMe drives and the older (but still relevant!) SCSI drives.

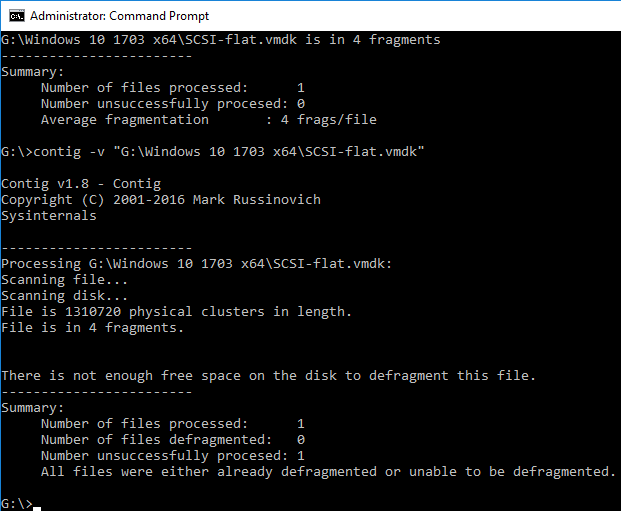

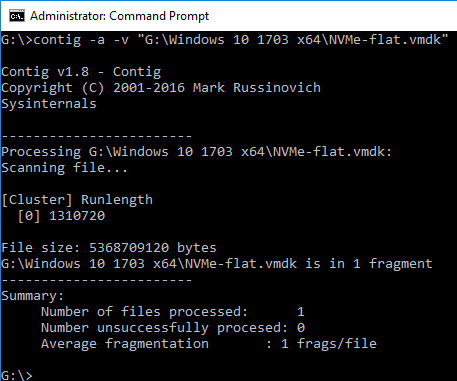

I created a brand new virtual machine running Windows 10 Pro 1703 build 15063.483. In VMware, I added two 5GB disks alongside the operating system disk: one SCSI and one on the new virtual NVMe controller. Each disk is a single preallocated file stored on the striped Windows volume backed by the dual 960 PROs from the tests above. Before booting the machine, I used Contig to defragment the disk files so that each one sat in a single contiguous segment. Otherwise the benchmark could suffer from split I/Os simply because a disk file is spread across separate segments on the host disk.
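For anyone who wants to repeat the defragmentation step, here is a minimal sketch of how it could be scripted. It assumes Sysinternals Contig is on the `PATH` as `contig.exe`, and the disk file names are hypothetical placeholders, not the ones from my machine. It runs Contig on a file and then calls it again with `-a`, which only analyzes and reports fragmentation.

```python
import subprocess

# Assumption: Sysinternals Contig is installed and reachable on PATH.
CONTIG = "contig.exe"


def defragment_command(disk_file):
    """Command line that asks Contig to make the file contiguous."""
    return [CONTIG, str(disk_file)]


def analyze_command(disk_file):
    """Command line that only reports fragmentation (-a = analyze)."""
    return [CONTIG, "-a", str(disk_file)]


def defragment(disk_file, dry_run=True):
    """Defragment a virtual disk file, then analyze it to confirm the result.

    With dry_run=True nothing is executed; the commands are only returned,
    which makes it easy to check them before touching any files.
    """
    commands = [defragment_command(disk_file), analyze_command(disk_file)]
    if not dry_run:
        for command in commands:
            subprocess.run(command, check=True)
    return commands


# Hypothetical file names for the two preallocated test disks.
for name in ("scsi-test-flat.vmdk", "nvme-test-flat.vmdk"):
    for command in defragment(name, dry_run=True):
        print(" ".join(command))
```

Pass `dry_run=False` with the virtual machine powered off to actually run the commands.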

I then booted into Windows and immediately formatted both drives as NTFS using a full format (not a quick format). At this point I was ready to test the drives. After running the tests a few times, here’s what I ended up with.

CrystalDiskMark benchmark VMware SCSI disk

CrystalDiskMark benchmark VMware NVMe disk

I don’t know about you, but I’m slightly underwhelmed by the results. Arguably the most important benchmark (4K random read at a queue depth of 32) is consistently 37% slower on the brand new virtual NVMe drive. We do see a 32% increase in sequential read performance, so I suppose it’s technically true that the virtual NVMe drives are faster than the SCSI drives in that respect. Still, the drop in 4K random reads is a large enough turn-off that I won’t be upgrading my virtual drives.
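If you want to run the same comparison on your own numbers, the percentages above are plain relative changes against the SCSI result. Here is a small sketch of that arithmetic. The throughput values in it are made-up placeholders chosen to reproduce a 37% drop and a 32% gain; they are not the figures from my CrystalDiskMark runs.

```python
def percent_change(baseline, candidate):
    """Relative change of candidate versus baseline, in percent.

    Negative means the candidate is slower (lower throughput) than the baseline.
    """
    if baseline <= 0:
        raise ValueError("baseline throughput must be positive")
    return (candidate - baseline) * 100.0 / baseline


# Placeholder throughput values in MB/s (illustrative only, not measured data).
scsi_4k_q32_read = 100.0
nvme_4k_q32_read = 63.0
scsi_seq_read = 100.0
nvme_seq_read = 132.0

print(f"4K Q32 read: {percent_change(scsi_4k_q32_read, nvme_4k_q32_read):+.0f}%")
print(f"Sequential read: {percent_change(scsi_seq_read, nvme_seq_read):+.0f}%")
```

CrystalDiskMark reports throughput, so higher is better, and a negative result here means the virtual NVMe disk lost to the SCSI disk on that test.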